Google AI Agents: Powering Search Defense with Smart Glasses

Introduction: A New Era of AI-Powered Interaction

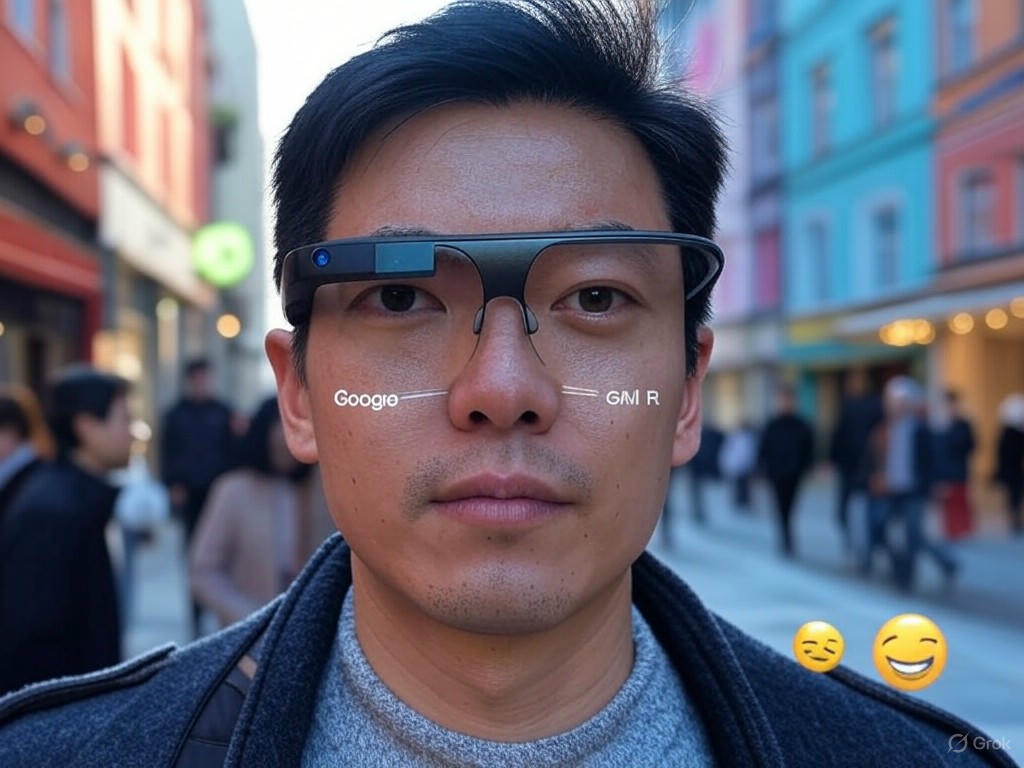

Imagine a world where your glasses do more than correct your vision—they answer your questions, guide you through a foreign city, and manage your day, all without lifting a finger. This is the future Google is crafting with its Google AI agents embedded in smart glasses, powered by the innovative Gemini AI. In this deep dive, we’ll uncover how these wearable wonders are shaping the future of search, personal assistance, and everyday life, while defending Google’s dominance in a fiercely competitive tech landscape.

The Rise of Smart Glasses as the Ultimate AI Platform

Smart glasses aren’t just a futuristic fantasy anymore. They’re becoming a tangible reality, and Google is making one of the most ambitious pushes in the category. Unlike smartphones, which demand your attention with screens, Google AI agents within smart glasses offer a hands-free way to interact with the digital world. The glasses carry cameras, microphones, and other sensors that capture the context of your environment so the assistant can deliver personalized, real-time help.

But why glasses? Think about it: they sit right on your face, always within reach, ready to project information directly into your field of view or whisper answers through discreet audio. Google’s vision is to make technology so intuitive that it feels like an extension of yourself, blending into your daily routine without the clunkiness of pulling out a device.

Why Now? Timing the Wearable Revolution

The timing couldn’t be better for smart glasses to take center stage. With wearable technology gaining traction—think smartwatches and earbuds—consumers are already primed for devices that integrate into their lives. Google’s partnerships with stylish eyewear brands like Warby Parker and Gentle Monster are a masterstroke, ensuring these glasses aren’t just functional but fashionable too. Who wouldn’t want a pair of prescription-ready, AI-powered frames that don’t scream “tech geek”?

- Hands-free interaction: Speak or gesture to get things done without interruption.

- Contextual awareness: Sensors understand your environment for tailored responses.

- Mainstream design: Sleek, everyday wearability thanks to top-tier brand collaborations.

Meet Gemini: The Brain Behind Google AI Agents

At the heart of Google’s smart glasses beats Gemini, an AI powerhouse that takes personal assistance to a whole new level. Unlike older voice assistants that struggled with complex tasks, Gemini combines cloud and on-device processing to handle intricate commands, real-time searches, and even visual recognition. Whether you’re asking for directions or identifying a landmark, Gemini makes it feel effortless.

I remember fumbling with my phone during a trip abroad, trying to translate a menu. With Gemini-powered glasses, I could’ve just looked at the text and heard the translation instantly. It’s not just convenient—it’s a game-changer for how we navigate the world.

Gemini’s Standout Features in Smart Glasses

- Contextual Search: Get answers based on where you are and what you’re looking at.

- Visual Recognition: Point at an object, and Gemini tells you what it is or where to buy it.

- Productivity Tools: Manage schedules, send messages, or set reminders hands-free.

- AR Overlays: See navigation cues or translated text right in your line of sight.

- Accessibility: Real-time assistance for users with vision or hearing impairments.

Defending Search with Google AI Agents in Wearables

Let’s talk about the bigger picture: Google’s core business is search, and it’s under siege from rivals like Meta and Apple. By embedding Google AI agents into smart glasses, the company isn’t just innovating—it’s fortifying its position. These glasses transform search from something you type into a browser to a dynamic, multimodal experience that happens wherever you are.

Picture this: you’re walking down a street, spot an interesting building, and with a quick voice command or glance, your glasses pull up its history, reviews, and nearby attractions. This isn’t just search; it’s search reimagined for a hands-free, context-aware world. Google’s betting that this seamless integration will keep users within its ecosystem, no matter how fierce the competition gets.

AI Mode and the Evolution of Search

Google’s new AI Mode in Search, paired with smart glasses, lets you chat with your AI agent, ask follow-up questions, and explore visual results. Project Astra, Google’s research prototype for a universal assistant, takes this further by combining visual input, location data, and text to answer queries about your surroundings. It’s like having Google Image Search in real life: point your glasses at a product and get info on where to buy it or on similar items.

- Chat-like interactions for a more natural search experience.

- Visual search capabilities that interpret the world around you.

- Shopping recommendations triggered by what you see in real time.
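
For the curious, here is a rough idea of what "combining visual input, location data, and text" could look like in code. This is only an illustrative sketch in Python, not Google's implementation: the `MultimodalQuery` class, its fields, and the `describe_query` helper are all hypothetical names invented for this example, and the recognized object is represented by a plain text label rather than a real image.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class MultimodalQuery:
    """Hypothetical bundle of what the wearer asked, saw, and where they are."""
    text: str                                       # spoken or typed question
    image_label: Optional[str] = None               # stand-in for what the camera recognized
    location: Optional[Tuple[float, float]] = None  # (latitude, longitude)


def describe_query(query: MultimodalQuery) -> str:
    """Flatten whichever signals are present into one context-rich search string."""
    parts = [query.text.strip()]
    if query.image_label:
        parts.append(f"about: {query.image_label}")
    if query.location:
        lat, lon = query.location
        parts.append(f"near: {lat:.4f},{lon:.4f}")
    return " | ".join(parts)


if __name__ == "__main__":
    query = MultimodalQuery(
        text="Where can I buy this?",
        image_label="running shoe",
        location=(48.8584, 2.2945),
    )
    print(describe_query(query))
```

The point of the sketch is that the same question means something different depending on what you are looking at and where you are standing. A real system would pass images and sensor data to a model rather than building a text string.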

Everyday Magic: What Smart Glasses Can Do for You

Beyond defending search, Google’s smart glasses powered by Google AI agents offer a treasure trove of practical uses. They’re not just for tech enthusiasts—they’re tools for anyone looking to simplify life. Whether you’re a busy professional, a traveler, or someone who just hates juggling devices, these glasses aim to be your ultimate sidekick.

Real-World Use Cases You’ll Love

Let’s break down how these glasses fit into daily life. Need directions? Turn-by-turn navigation appears right in your view, no phone needed. Struggling with a foreign sign? Real-time translation overlays the text in your language. It’s like having a personal assistant who’s always one step ahead.

- Navigation: Hands-free directions for walking, driving, or exploring.

- Translation: Instantly interpret speech or text in unfamiliar languages.

- Task Management: Add reminders or check schedules with a voice command.

- Communication: Send messages or take calls without touching a device.

- Media Control: Play music or access content with a simple gesture.

- Capture Moments: Snap photos or videos from your unique perspective.

Have you ever missed a moment because you were fumbling with your phone? With these glasses, capturing life as you see it becomes second nature, freeing you up to focus on the experience.

Accessibility: Tech That Includes Everyone

One of the most heartwarming aspects of this tech is its potential to empower people with disabilities. For those with vision or hearing impairments, Google AI agents in smart glasses can describe surroundings, amplify sounds, or provide audio cues. It’s a reminder that technology, when done right, can break down barriers and make the world more accessible for everyone.

Fashion Meets Function: Google’s Eyewear Partnerships

Let’s be honest—nobody wants to wear clunky, futuristic goggles that scream “I’m from a sci-fi movie.” Google gets this, which is why they’ve teamed up with brands like Warby Parker and Gentle Monster. The goal? Create smart glasses that look as good as they perform, with options for prescription lenses and styles that match your vibe.

These collaborations aren’t just about aesthetics. They tap into existing retail networks, making it easy to try on frames or get custom fittings at local stores. It’s a brilliant move to lower the barrier to entry and make AI wearables a part of everyday fashion.

Why These Partnerships Matter

- Retail Access: Try before you buy with in-store fittings and services.

- Design Innovation: Comfort and style meet cutting-edge tech.

- Consumer Trust: Familiar brands ease the transition to smart eyewear.

Google vs. the Competition: Who’s Winning the AI Glasses Race?

Google isn’t alone in this space. Meta’s Ray-Ban smart glasses are already on shelves, and Apple’s rumored AR projects loom in the background. Google’s approach with Google AI agents aims to stand out through deep ecosystem integration, advanced multimodal AI, and a developer-friendly platform. Let’s compare how the three stack up.

| Feature | Google Smart Glasses | Meta Ray-Ban | Apple (Rumored) |
|---|---|---|---|
| AI Capabilities | Gemini (voice, vision, context) | Meta AI (mostly voice) | Siri or next-gen AI |
| AR Display | In-lens AR overlays possible | No AR display | Expected, details unclear |
| Developer Support | Open for third-party apps | Limited ecosystem | Unknown |
| Accessibility Focus | Strong assistive features | Basic audio support | Unknown |

What do you think—does Google’s head start with Gemini give it an edge, or are you holding out for Apple’s take on smart glasses? I’m curious to see how this rivalry plays out!

Privacy and Security: Addressing the Elephant in the Room

With great power comes great responsibility, and smart glasses raise valid concerns about privacy. Cameras and microphones constantly listening or watching? It sounds like a recipe for unease. Google will need to prioritize user consent, transparent data practices, and robust encryption to win trust. After all, no one wants their private moments accidentally recorded or shared.

There’s also the social angle—will people around you feel comfortable knowing your glasses might be capturing their image or voice? Google’s challenge isn’t just technical; it’s about navigating cultural and ethical boundaries to make this tech widely accepted.

Steps Google Might Take

- Clear opt-in policies for data collection and recording.

- Visible indicators (like a light) when cameras or mics are active.

- Encrypted, on-device processing to minimize cloud data risks.
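
To make the first two ideas concrete, here is a tiny Python sketch of how an opt-in rule and a visible indicator could be tied together. It is purely hypothetical: `CaptureSettings`, `can_record`, and `start_recording` are names made up for this post, and nothing here reflects how Google's glasses actually work.

```python
from dataclasses import dataclass


@dataclass
class CaptureSettings:
    """Hypothetical per-user privacy settings (not a real Google API)."""
    user_opted_in: bool = False       # explicit consent, off by default
    indicator_light_on: bool = False  # visible signal for people nearby


def can_record(settings: CaptureSettings) -> bool:
    """Recording is allowed only with consent AND a lit indicator."""
    return settings.user_opted_in and settings.indicator_light_on


def start_recording(settings: CaptureSettings) -> str:
    """Refuse without opt-in; otherwise switch the indicator on before recording."""
    if not settings.user_opted_in:
        return "blocked: user has not opted in"
    settings.indicator_light_on = True
    return "recording (indicator on)"


if __name__ == "__main__":
    print(start_recording(CaptureSettings()))
    print(start_recording(CaptureSettings(user_opted_in=True)))
```

The design choice worth noticing is that consent defaults to off and the indicator is switched on by the same code path that starts recording, so the light cannot be skipped by accident.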

The Road Ahead: When Can We Get Our Hands on These?

While Google hasn’t pinned down an exact release date, whispers in the tech world suggest we might see the first wave of Gemini-powered smart glasses as early as 2026. Developers are expected to gain access to software kits sooner, which means a thriving app ecosystem could be ready by launch day. This isn’t just a product—it’s the start of a broader platform for Google AI agents to evolve.

Future Possibilities to Watch

What’s next after the initial launch? I’d bet on deeper integration with health sensors—imagine your glasses tracking vitals or reminding you to drink water. Enterprise applications could also take off, with glasses aiding remote workers or field technicians. And let’s not forget education—students might one day use AR overlays to study anatomy or history in immersive ways.

- Health and fitness tracking with advanced biometrics.

- Enterprise solutions for hands-free workflows.

- Educational AR tools for interactive learning.

- Improved battery life and lighter, sleeker designs.

Challenges and Hurdles to Overcome

As exciting as this all sounds, Google faces a few bumps on the road. Battery life remains a big question—can these glasses last a full day with all those sensors and AR features running? Then there’s the cost. Early adopters might shell out premium prices, but for mass adoption, Google will need to make these accessible to a wider audience without sacrificing quality.

There’s also the learning curve. Not everyone’s used to voice commands or gesture controls, and some might find the idea of wearing AI on their face downright odd. Google will need clever onboarding and marketing to smooth out these wrinkles.

Potential Roadblocks

- Battery Constraints: Balancing power-hungry features with all-day use.

- Pricing: Keeping costs reasonable for mainstream buyers.

- User Adoption: Overcoming resistance to new interaction paradigms.

Conclusion: A Glimpse Into AI-First Living

The fusion of Google AI agents and smart glasses is more than a tech trend—it’s a glimpse into a future where digital assistance is woven into the very fabric of our lives. By redefining search and personal interaction, Google isn’t just defending its territory; it’s paving the way for a hands-free, context-rich world. Whether you’re navigating a new city, managing a packed schedule, or simply curious about what’s around you, these glasses promise to make life smarter, easier, and more connected.

I’m excited to see how this unfolds—are you? Drop your thoughts in the comments below, share this post with fellow tech enthusiasts, or check out our related articles on wearable tech and AI innovations. Let’s keep the conversation going!

Frequently Asked Questions

How Do Google AI Agents Enhance Smart Glasses?

Through Gemini, Google AI agents bring real-time search, visual recognition, navigation, and task management directly to your field of view or ear, making everyday tasks seamless and hands-free.

Will These Glasses Support Prescription Lenses?

That’s the plan. Collaborations with brands like Warby Parker focus on integrating AI tech into frames that accommodate prescription lenses, so people who already wear glasses aren’t left out.

When Might We See Google’s Smart Glasses Launch?

While nothing’s official, industry insights point to a possible 2026 release, with developer tools rolling out earlier to build a robust app ecosystem.

What Makes Google’s Approach Unique Compared to Competitors?

Google’s strength lies in Gemini’s multimodal AI, AR capabilities, accessibility features, and an open platform for third-party apps, setting it apart from Meta’s simpler offerings and Apple’s unconfirmed plans.
